Interpreting Potts and Transformer Protein Models Through the Lens of Simplified Attention

Original Paper link - bhattacharya.pdf (stanford.edu)

Highlights of the Paper

- Argues that attention captures real properties of protein family data, leading to a principled model of protein interactions.

- Introduces an energy-based attention layer, factored attention, which recovers a Potts model.

- Contrasts Potts models and Transformers.

- Shows that the Transformer leverages hierarchical signals in protein family databases that are not captured by single-layer models.

Introduction/Background

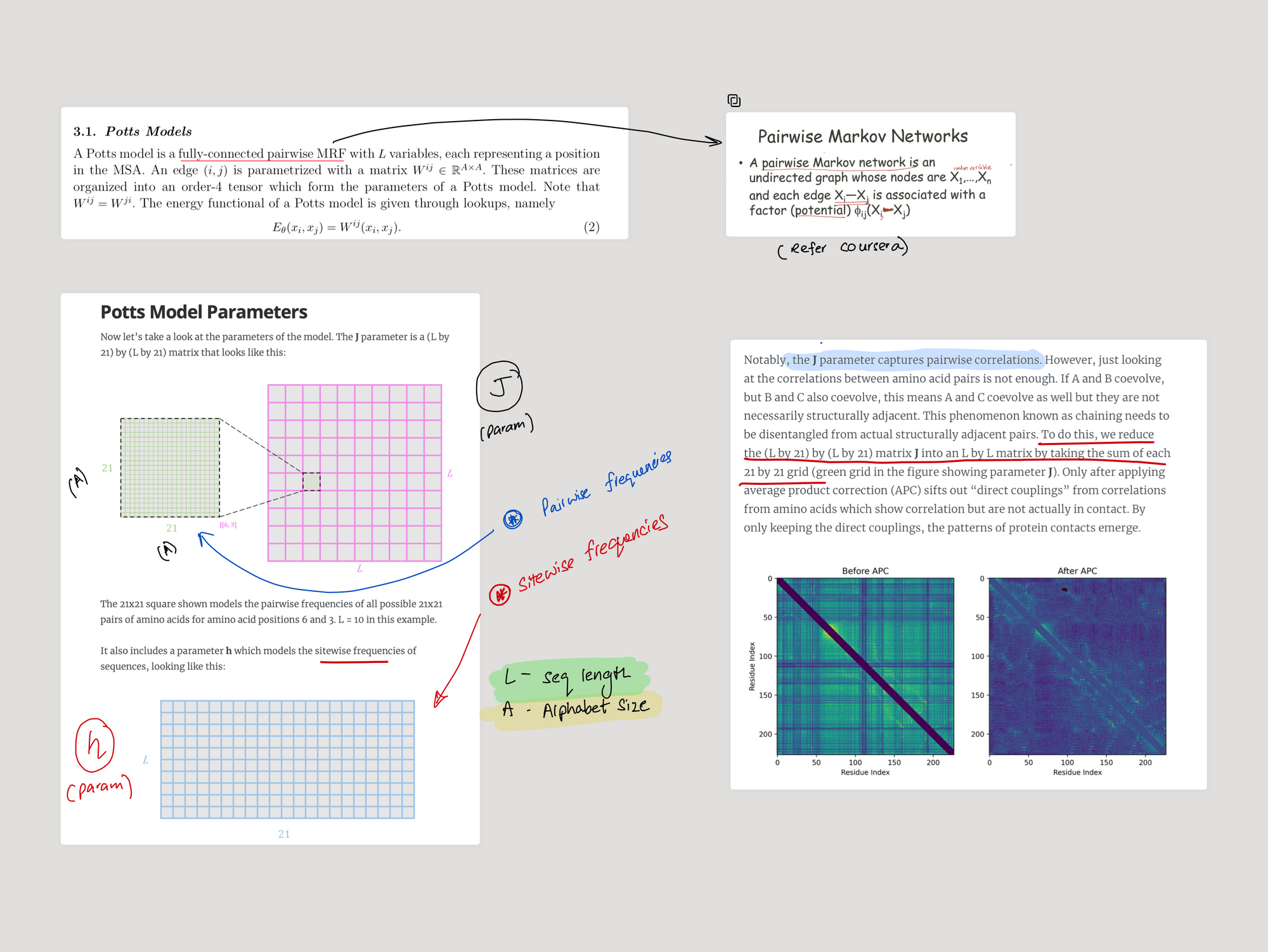

Potts Model

- A Potts model is a kind of Markov Random Field (MRF), a popular method for unsupervised protein contact prediction.

- MRF-based methods can capture statistical information about co-evolving positions.

- There is a great illustrated example of a Potts Model in Tianyu's Blog.
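
To make the points above concrete, here is a minimal NumPy sketch of how a Potts model scores a sequence and how its couplings are commonly turned into contact scores. It assumes one common convention, $E(x) = -\sum_i h_i(x_i) - \sum_{i<j} J_{ij}(x_i, x_j)$ with $p(x) \propto e^{-E(x)}$; the function names, array layout, and the Frobenius-norm scoring are illustrative assumptions, not code from the paper.

```python
import numpy as np

def potts_energy(seq, h, J):
    """Energy of one integer-encoded sequence under a Potts model.

    Assumed (hypothetical) layout:
      seq: (L,) ints in [0, A), one symbol per position
      h:   (L, A) single-site fields
      J:   (L, L, A, A) pairwise couplings; only entries with i < j are used
    """
    L = len(seq)
    idx = np.arange(L)
    # Sum of h_i(x_i) over all positions.
    single = h[idx, seq].sum()
    # pair_all[i, j] = J[i, j, seq[i], seq[j]] for every pair of positions.
    pair_all = J[idx[:, None], idx[None, :], seq[:, None], seq[None, :]]
    # Keep each unordered pair once (strict upper triangle, i < j).
    pair = np.triu(pair_all, k=1).sum()
    return -(single + pair)

def contact_scores(J):
    """Frobenius norm of each A x A coupling block, giving an (L, L) score map.

    Large scores suggest strongly co-evolving position pairs.
    """
    return np.linalg.norm(J, axis=(2, 3))

# Toy usage with random parameters (no trained model involved).
rng = np.random.default_rng(0)
L, A = 5, 20  # 5 positions, 20 amino acids
h = rng.normal(size=(L, A))
J = rng.normal(size=(L, L, A, A))
seq = rng.integers(0, A, size=L)
print(potts_energy(seq, h, J))
print(contact_scores(J).shape)
```

In practice the parameters $h$ and $J$ are fit to a protein family's aligned sequences, and the raw coupling norms are usually post-processed before being read as contacts; both steps are left out of this sketch.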