Introduction to the Adam Optimizer & the advancements leading to it

I gave this presentation at one of the Journal Club meetings of the computational imaging group at Harvard (wadduwagelab).

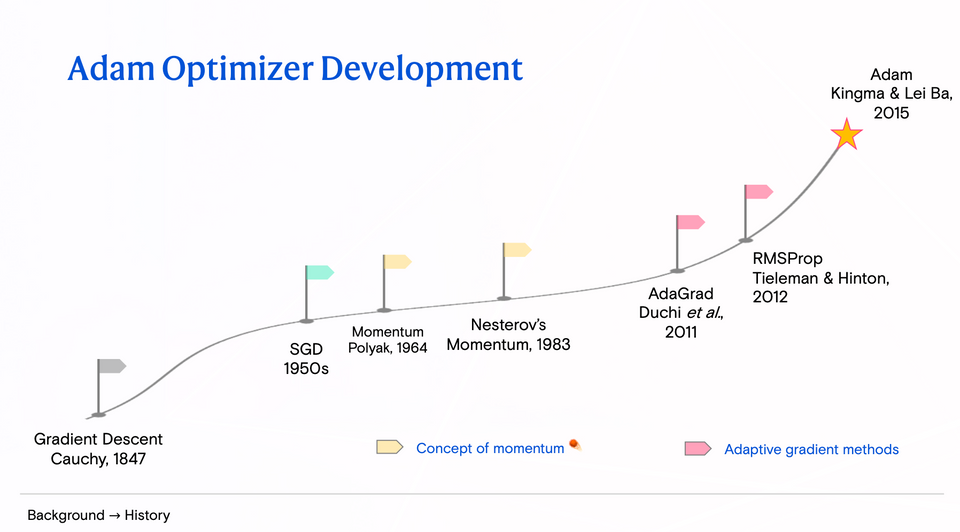

The presentation covers how full-batch gradient descent [1] evolved into the Adam optimizer [2], with each step along the way tackling a specific optimization challenge.
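To make the two endpoints of that evolution concrete, here is a minimal sketch that runs plain gradient descent and Adam side by side. It is not taken from the slides: the toy objective f(x) = (x - 3)^2, the function names, the step count, and the learning rate of 0.1 are all assumptions chosen for illustration. The values of `beta1`, `beta2`, and `eps` are the defaults suggested in the Adam paper [2].

```python
import math


def grad(x):
    # Gradient of the assumed toy objective f(x) = (x - 3)^2.
    return 2.0 * (x - 3.0)


def gradient_descent(x0, lr=0.1, steps=500):
    # Plain gradient descent: step against the gradient with a fixed learning rate.
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def adam(x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    # Adam: keep exponential moving averages of the gradient (m) and of the
    # squared gradient (v), correct their bias toward zero, and scale the step.
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1.0 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1.0 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1.0 - beta1 ** t)           # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


x_gd = gradient_descent(0.0)
x_adam = adam(0.0)
print(f"gradient descent: {x_gd:.4f}, Adam: {x_adam:.4f}")
```

Both runs should end up close to the minimizer at x = 3. On a problem this simple, Adam has no advantage over gradient descent. The point is only to show what the update rule adds: a momentum-like average of gradients and a per-parameter step size scaled by the recent gradient magnitude.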

You can download the slides below. 👇

Adam-Review-2.pdf