Want to track ML experiments without sending logs to the cloud?

You may be doing research where your employer or research group explicitly tells you not to use certain third-party tools for dataset storage, experiment tracking, or artifact storage. What options do you have then?

In this short writeup, I'm going to introduce a handy open-source tool that you can use to track experiments locally:

LabML & LabML Dashboard

Let's get started.

Step 1 - Installing LabML & LabML Dashboard

Simply use pip to install.

pip install labml

pip install labml-dashboard

Step 2 - Cloning a starter repo to try out

I have included two Jupyter notebooks that contain sample code. Please note that the .labml.yaml file has a parameter (web_api) which I have set to ''. This means no data will be sent to the cloud.
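If you want to double-check that setting before running anything, here is a minimal sketch in Python. It assumes the .labml.yaml file is a flat list of `key: value` lines and only looks for the web_api key mentioned above. The helper names (read_web_api, is_local_only) are my own and not part of LabML, and this is a simple line scan, not a full YAML parser.

```python
from pathlib import Path
from typing import Optional


def read_web_api(config_path: Path) -> Optional[str]:
    """Return the web_api value from a flat key: value file, or None if absent.

    Hypothetical helper: a line-based scan, not a real YAML parser.
    """
    for raw in config_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        # Commented-out lines start with '#', so they never match here.
        if line.startswith("web_api:"):
            value = line[len("web_api:"):].strip()
            # Treat '' and "" the same way: both become an empty string.
            return value.strip("'\"")
    return None


def is_local_only(config_path: Path) -> bool:
    """True when web_api is present and empty, as in the starter repo."""
    return read_web_api(config_path) == ""


if __name__ == "__main__":
    # Assumes the starter repo was cloned into ./labml-sample
    cfg = Path("labml-sample") / ".labml.yaml"
    if cfg.exists():
        print("local only:", is_local_only(cfg))
    else:
        print("no .labml.yaml found at", cfg)
```

If the key is missing altogether, is_local_only returns False, so the check errs on the side of telling you to look at the file yourself.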

git clone https://github.com/ramithuh/labml-sample.git

Step 3 - Running a TensorFlow or PyTorch sample

Open one of the Jupyter notebooks and execute the code. Then, from your terminal, go to the repo's directory and launch the LabML dashboard:

cd labml-sample

labml dashboard

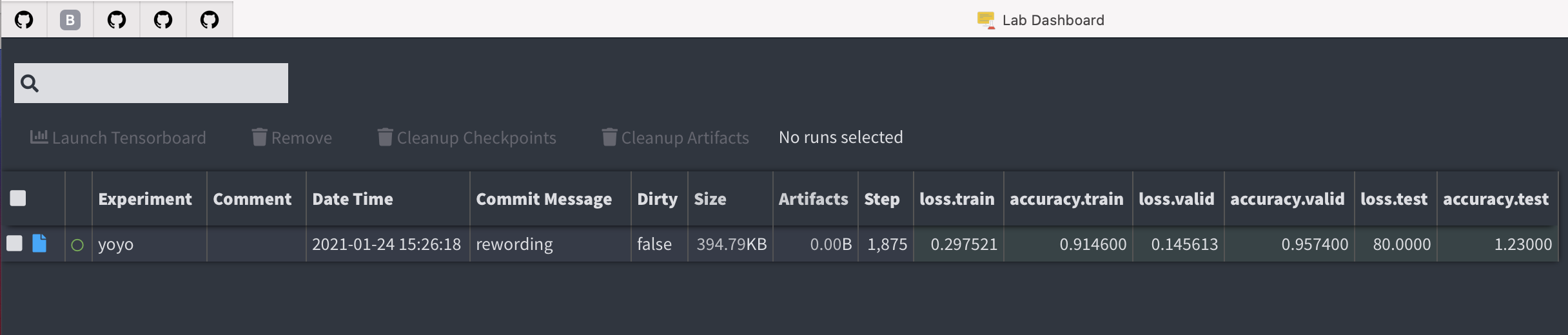

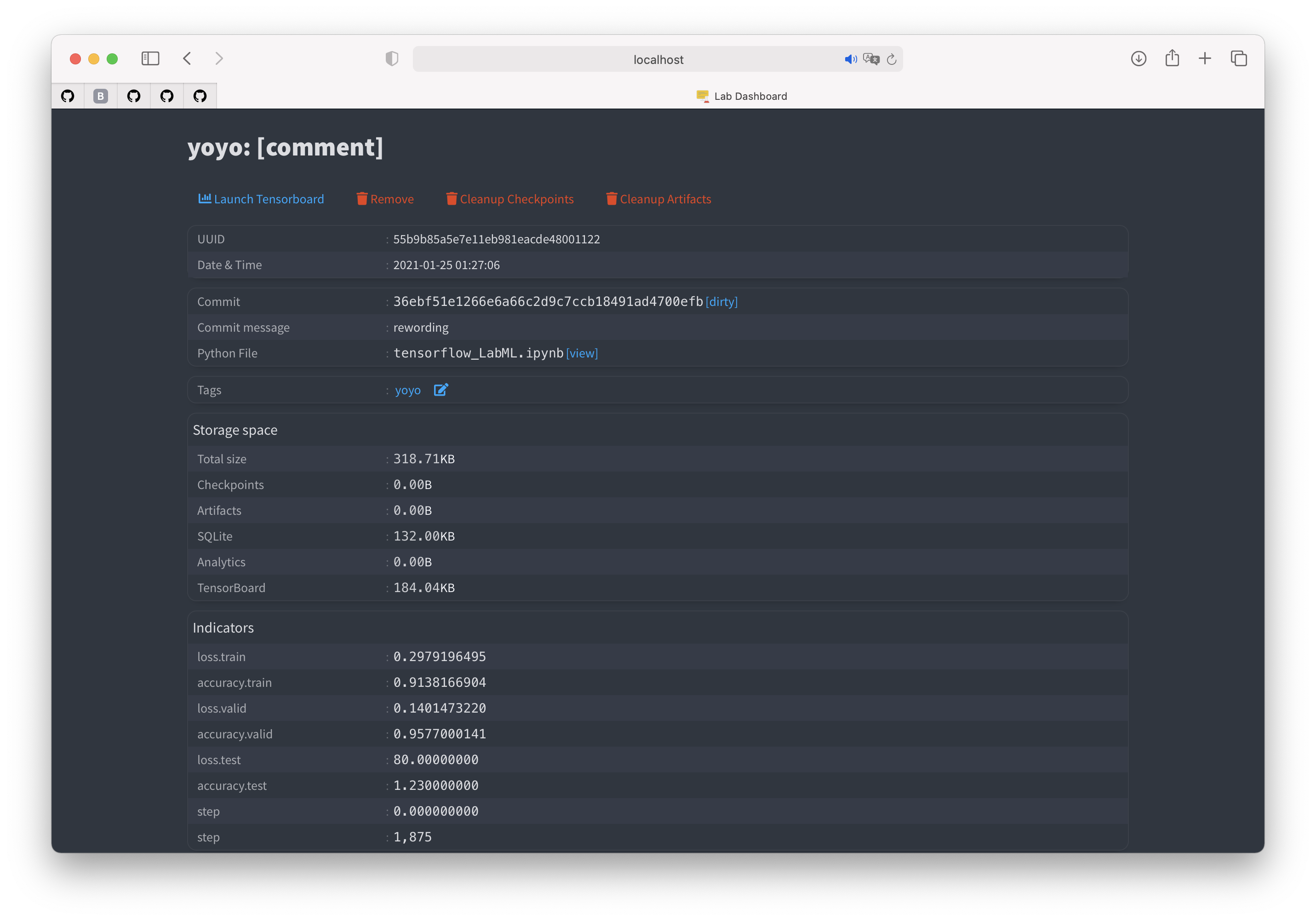

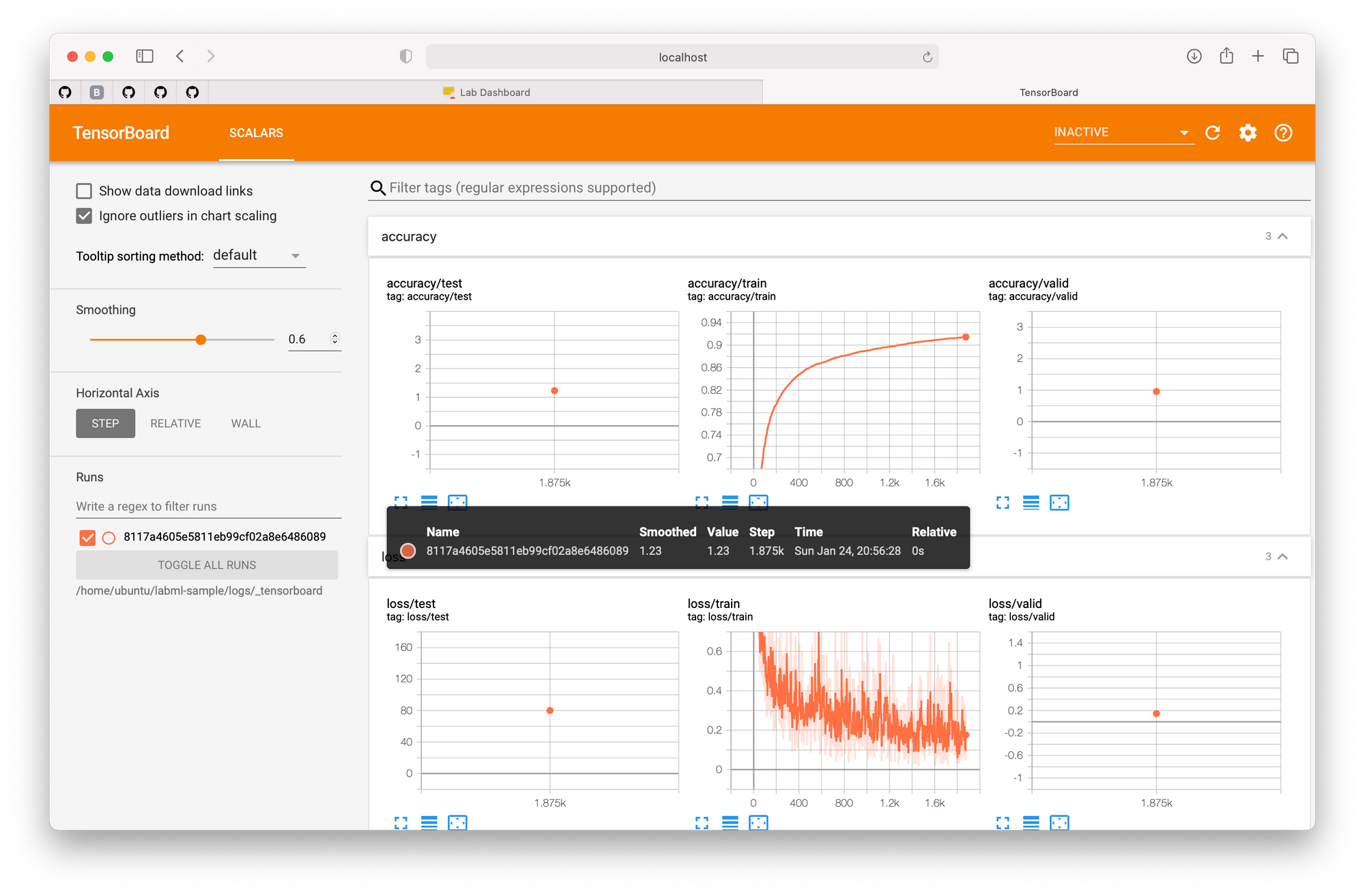

This will open a browser window with your experiment summary.

Please refer to the official documentation if you would like to make use of the full capabilities of LabML.

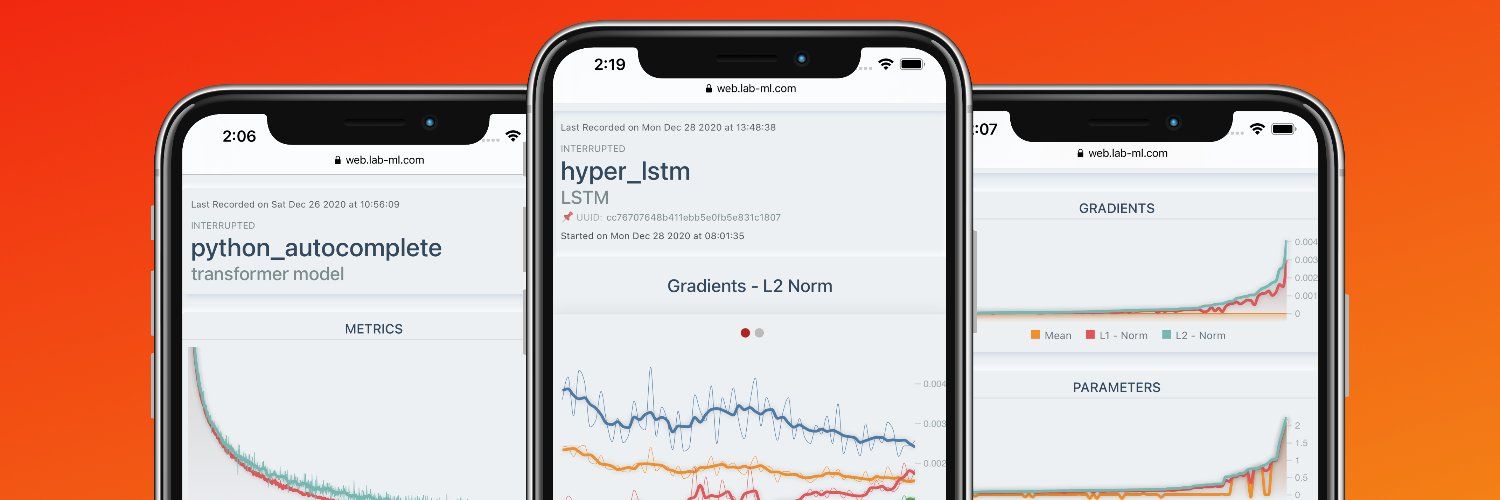

Monitoring experiments locally is only one aspect of LabML; its key focus is monitoring experiments from a mobile phone. They even have tutorials with deep learning paper implementations!

If you want to learn more, check out their latest work shared through the official Twitter handle, @labmlai.

Update (2021/01/25): @labmlai replied to my tweet. Apparently, the nice mobile-optimized version (LabML App) can be hosted locally as well. If I try setting it up, I'll update the instructions here.

You can also host https://t.co/mXly4yMyf6 locally. The setup is a little complex and we are working on making it simpler

— LabML (@labmlai) January 25, 2021

If you want to see how I set up LabML locally, watch the terminal recording below.